Query catalog of a Power BI workspace with Azure Functions

Hi Power BI folks! This time we wanted to share some code that can help you if you manage a Power BI tenant, and you want to document the underlying queries of those datasets, for which we will create a query catalog of a Power BI workspace.

We ran into this need ourselves when we were troubleshooting a CPU-expensive query that was hitting one of our databases. As a result, we could not tell from the many possible datasets where it was coming from.

In cases like this, we know that a proper documentation of the Power BI service is useful. But we did not have one handy, and for us to create one ourselves, we decided to programmatically build a query catalog of a Power BI workspace to help keeping this documentation up-to-date.

Goal

Our goal today will be creating a C# Azure Function that will connect with the XMLA endpoint of a Power BI workspace, interact with the underlying Analysis Services model behind each dataset, and return a ZIP file with the query Expressions and TableExpressions of those datasets.

Requirements

- Power BI Premium-Per-User or Power BI Premium license

- Enabled XMLA endpoints for your Premium or PPU workspaces, either with Read or Read/Write access. If you have not these enabled, refer to this documentation

- An Azure subscription to deploy our C# Azure Function

The strategy to build our program will be:

- Connect to the XMLA endpoint providing the workspace name as a parameter

- Loop through the different model

Expressionsand TableExpressions of the dataset (a.k.a. databases) in the workspace - Collect the queries in Expressions and TableExpressions of each dataset, using a custom object

- Return a ZIP file with the queries collected in the custom object

Building the program

We will use Visual Studio Code to develop our function. You may also do it using Visual Studio.

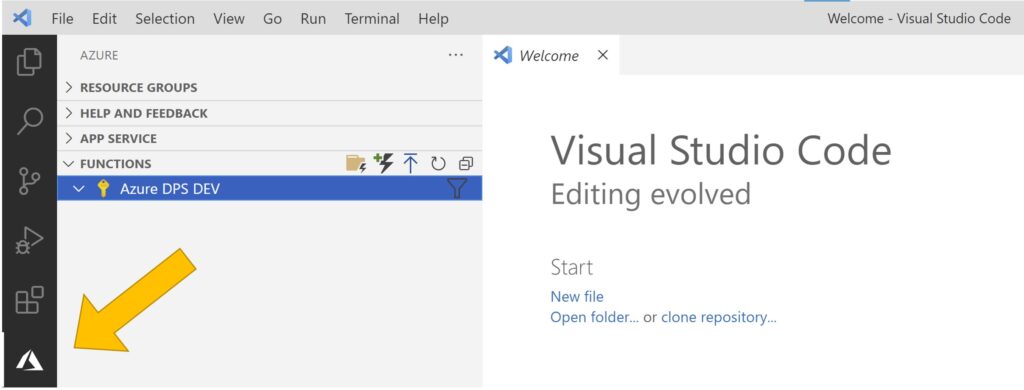

Firstly, let’s start by opening Visual Studio Code. Make sure you have installed the Azure Functions extension and that it is enabled:

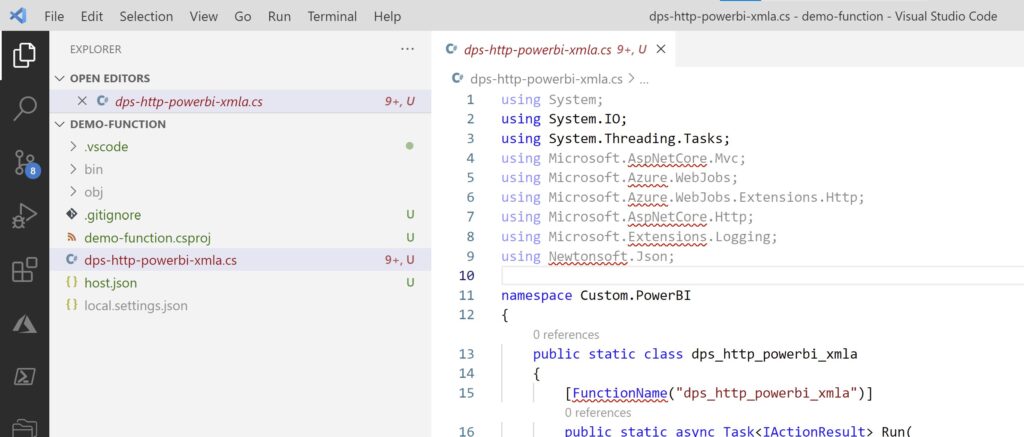

Then, we need to create an Azure Function project to host our function following the steps here, and specifying these options:

- Language: C#

- Runtime: .NET Core 3

- Template: HttpTrigger

- Function name: dps-http-powerbi-xmla

- Namespace: Custom.PowerBI

- AccessRights: Function

- Open in current window

A set of template files will appear in our working folder.

We need to add the required libraries to connect to our XMLA endpoint, which you can download here: MSOLAP libraries for Analysis Services.

However, another method that we found to be easier, is just to edit the .csproj file to include a reference to our Analysis Services libraries as shown on line 9, and then restore the dependencies by forcing a build on the project:

<Project Sdk="Microsoft.NET.Sdk">

<PropertyGroup>

<TargetFramework>netcoreapp3.1</TargetFramework>

<AzureFunctionsVersion>v3</AzureFunctionsVersion>

<RootNamespace>demo_function</RootNamespace>

</PropertyGroup>

<ItemGroup>

<PackageReference Include="Microsoft.NET.Sdk.Functions" Version="3.0.11" />

<PackageReference Include="Microsoft.AnalysisServices.NetCore.retail.amd64" Version="19.20.1" />

</ItemGroup>

<ItemGroup>

<None Update="host.json">

<CopyToOutputDirectory>PreserveNewest</CopyToOutputDirectory>

</None>

<None Update="local.settings.json">

<CopyToOutputDirectory>PreserveNewest</CopyToOutputDirectory>

<CopyToPublishDirectory>Never</CopyToPublishDirectory>

</None>

</ItemGroup>

</Project>

Then, let’s edit our HTTP-triggered Azure Function by adding the required using clauses and configuring the workspace_name parameter:

using System;

using System.Collections.Generic;

using System.IO;

using System.IO.Compression;

using System.Text;

using System.Threading.Tasks;

using Microsoft.AnalysisServices.Tabular;

using Microsoft.AspNetCore.Mvc;

using Microsoft.Azure.WebJobs;

using Microsoft.Azure.WebJobs.Extensions.Http;

using Microsoft.AspNetCore.Http;

using Microsoft.Extensions.Logging;

using Newtonsoft.Json;

namespace Custom.PowerBI

{

public static class CSHttpPowerBIXMLAProfiler

{

[FunctionName("download_powerbi_queries")]

public static async Task<IActionResult> Run(

[HttpTrigger(AuthorizationLevel.Function, "get", Route = null)] HttpRequest req,

ILogger log)

{

log.LogInformation("C# 'download_powerbi_queries' function processed a request.");

string workspace_name = req.Query["workspace_name"];

var responseMessage = string.Empty;

if (string.IsNullOrEmpty(workspace_name))

{

responseMessage = "URI query parameter missing 'workspace_name'.";

return new BadRequestObjectResult(responseMessage);

}

}

}

}

Most importantly, we will:

- Build the connection string (we are hardcoding it in the script but a production deployment should secure it in Environment Variables or Azure Key Vault)

- Use the

AnalysisServices.Tabularlibrary to connect to the XMLA endpoint of the entire Power BI workspace - Loop through all the datasets in the Power BI workspace

- Get the expressions from the Tabular objects’ properties

string ConnectionString = $"Provider=MSOLAP;Data Source=powerbi://api.powerbi.com/v1.0/myorg/{workspace_name};User ID=myemail@host.com;Password=***";

var databases = new List<(string Name, List<(string Name, string Expression)> Expressions, List<(string TableName, string SourceType, string PartitionExpression)> TableExpressions)>();

using (var server = new Server())

{

server.Connect(ConnectionString);

foreach(Database db in server.Databases)

var databases = new List<(string Name, List<(string Name, string Expression)> Expressions, List<(string TableName, string SourceType, string PartitionExpression)> TableExpressions)>();

using (var server = new Server())

{

try

{

server.Connect(ConnectionString);

}

catch (Exception e)

{

log.LogDebug($"Unable to connect to Power BI with parameters provided: {e}");

return new BadRequestObjectResult("Unable to connect to Power BI with parameters provided");

}

foreach (Database database in server.Databases)

{

log.LogDebug($"Processing dataset: {database.Name}");

var model = database.Model;

var expressions = new List<(string Name, string Expression)>();

foreach (var expression in model.Expressions)

{

expressions.Add(

(expression.Name, expression.Expression)

);

}

var tableExpressions = new List<(string TableName, string SourceType, string PartitionExpression)>();

foreach (Table table in model.Tables)

{

var type = table.Partitions[0].Source.GetType();

if (type == typeof(MPartitionSource))

{

var source = (MPartitionSource)table.Partitions[0].Source;

tableExpressions.Add(

(table.Name, "MPartitionSource", source.Expression)

);

}

else if (type == typeof(CalculatedPartitionSource))

{

var source = (CalculatedPartitionSource)table.Partitions[0].Source;

tableExpressions.Add(

(table.Name, "CalculatedPartitionSource", source.Expression)

);

}

else if (type == typeof(QueryPartitionSource))

{

var source = (QueryPartitionSource)table.Partitions[0].Source;

tableExpressions.Add(

(table.Name, "QueryPartitionSource", source.Query)

);

}

else

{

log.LogDebug($"Table Expression: {table.Name}: non-supported Source type '{type}'.");

}

}

databases.Add(

(

Name: database.Name,

Expressions: expressions,

TableExpressions: tableExpressions

)

);

}

}

Last, we will generate the HTTP API response by converting our databases object into a usable ZIP file which will be returned after a successful call:

// ZIP Files

try

{

using (var memoryStream = new MemoryStream())

{

using (var archive = new ZipArchive(memoryStream, ZipArchiveMode.Create, true))

{

foreach (var database in databases)

{

foreach (var expression in database.Expressions)

{

byte[] bytes = Encoding.UTF8.GetBytes(expression.Expression);

var zipEntry = archive.CreateEntry($"{database.Name}/expressions/{expression.Name}.m",

CompressionLevel.Fastest);

using (var zipStream = zipEntry.Open())

{

zipStream.Write(bytes, 0, bytes.Length);

}

}

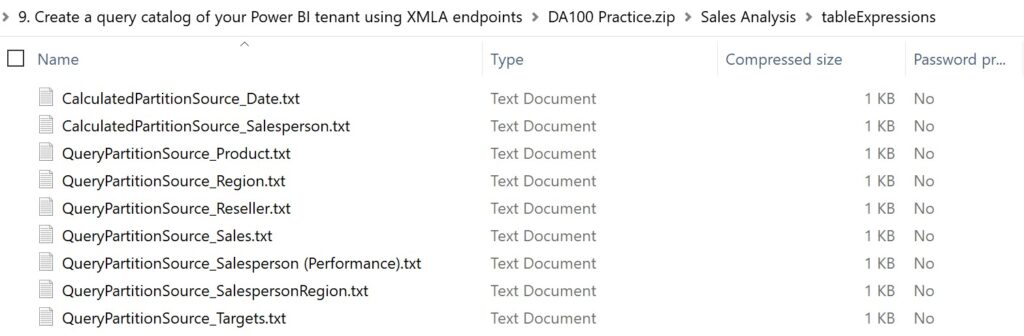

foreach (var tableExpression in database.TableExpressions)

{

byte[] bytes = Encoding.UTF8.GetBytes(tableExpression.PartitionExpression);

var zipEntry = archive.CreateEntry($"{database.Name}/tableExpressions/{tableExpression.SourceType}_{tableExpression.TableName}.txt",

CompressionLevel.Fastest);

using (var zipStream = zipEntry.Open())

{

zipStream.Write(bytes, 0, bytes.Length);

}

}

}

}

return new FileContentResult(memoryStream.ToArray(), "application/zip")

{

FileDownloadName = $"{workspace_name}.zip"

};

}

}

catch (Exception e)

{

responseMessage = "Something went wrong while processing the Power BI data.";

log.LogError(responseMessage, e);

return new ObjectResult("Something went wrong while processing the Power BI data.") { StatusCode = 500 };

}

You can download the entire code snippet on Gist from here.

Deploy to Azure

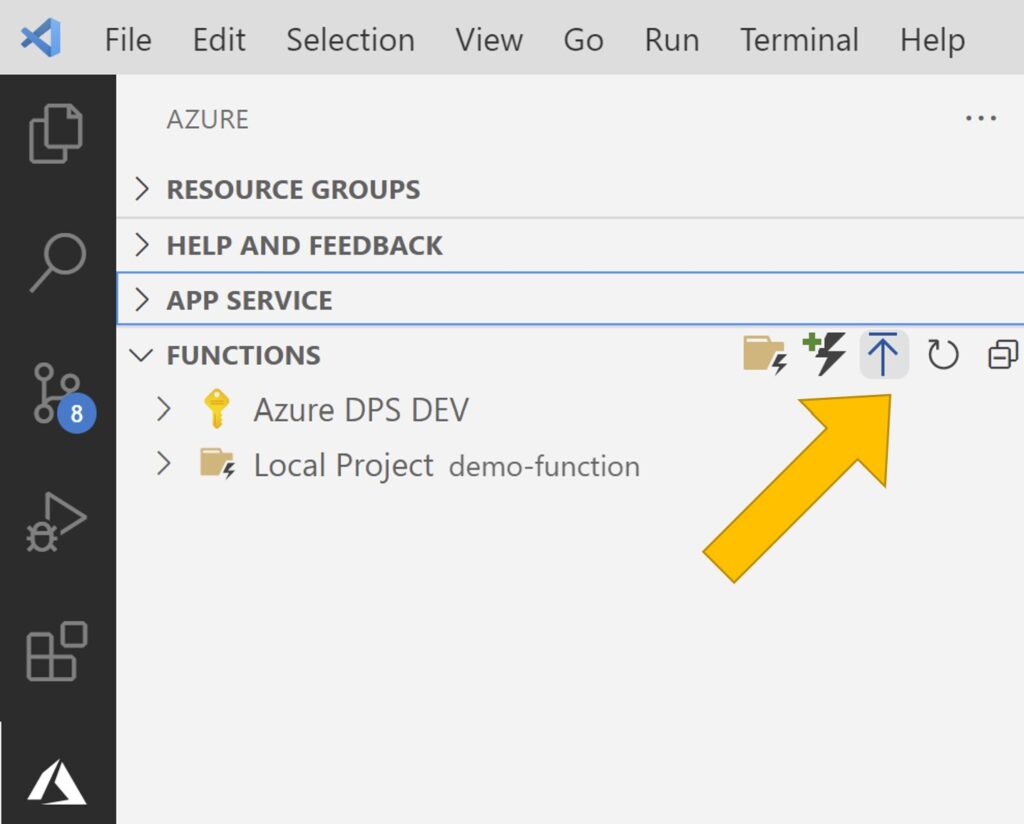

Let’s deploy this to Azure. Go to the Azure icon of the left bar and spot the “Deploy to Function App…” button of an arrow pointing up:

Then, specify the options in the Command pallette:

- Create a new Function App in Azure

- Type a Globally unique name for the new function app

- Runtime: .NET Core 3.1

- Select a region for the resources

- Pricing tier: Free try, or any other pricing tier of your preference, depending on the workloads you might need

Testing the solution

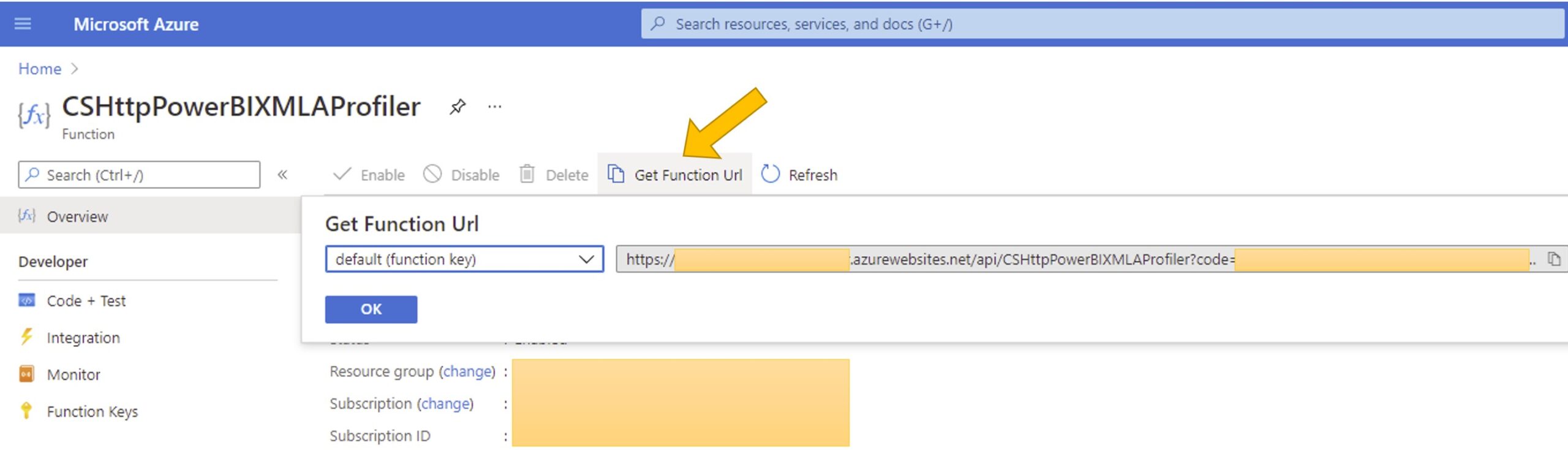

Firstly, go to your Azure portal and look for the Azure Function created.

Secondly, navigate the blade to: Functions > {Your function name} > Overview. You will see an option “Get function URL” from which you can copy the URL along with the SAS credentials to run it.

The URL will look like this: https://your-function-name.azurewebsites.net/api/CSHttpPowerBIXMLAProfiler?code=some_long_SAS_code

After that, just add this trailer to URL: &workspace_name=your_workspace_name,

Final URL form: https://your-function-name.azurewebsites.net/api/CSHttpPowerBIXMLAProfiler?code=some_long_SAS_code&workspace_name=your_workspace_name

Lastly, paste the URL in your browser and wait a few seconds until it returns the ZIP file of your workspace for download:

Voilà, here is our query data related to the Power BI workspace!

Summary

- Enable the XMLA endpoint Read permission on the target workspace

- Create an Azure Function with the code of this example

- Publish your function to Azure

- Hit the function URL while passing the workspace name parameter to get the ZIP file constructed

There’s several things that we can do with this query data, and that we could implement as extensions of the functionality. For instance:

- Parse the M queries containing SQL sources to extract the SQL query executed by your tables

- Enhance the API to get other types of expressions, such as Calculation Groups and DAX calculated tables

At this point, it becomes clear that the possibilities for integrations are many.

We hope you liked this post about how to create a query catalog of a Power BI workspace. Let us know your thoughts and comments 🙂 Also don’t forget to follow us on Twitter and subscribe to our emailsto stay tuned with more content. See you next time!